Blogs

- Spring Festival Break: Building an AI App with DeepSeek + ClinePRO, 12x Efficiency Boost

- Hands-on Video Breakdown - How Manus is Revolutionizing the 2025 AI Agent Market

- Quickly Build Multi-Model Team Agents Based on Dify Platform

- GitHub Copilot VSCode 1.98: Raising the Bar in the AI Coding Assistant Race

Spring Festival Break: Building an AI App with DeepSeek + ClinePRO, 12x Efficiency Boost

Have you seen Tsim Sha Tsui at 5 AM?

Have you witnessed Chinatown at 6 AM?

Have you watched AI write code during sunrise at Sentosa?

This Spring Festival break, I experienced all of the above. Every morning from 5 to 8 AM, I worked on a complete product using DeepSeek + AISE ClinePRO. Total coding time was about 20 hours, most of it spent on decomposing problems and optimizing context for the AI. The AI handled the entire project setup, coding, testing, and debugging; I didn’t write a single line of code.

Traditional manual development would have required 3 developers working full-time for 2 weeks (240 hours). Rough estimate: 12x efficiency boost.
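
The estimate can be reproduced with a few lines of arithmetic. This is only a back-of-the-envelope sketch; the 40-hour work week is an assumption that the 240-hour figure implies but the text does not state.

```python
# Back-of-the-envelope check of the efficiency estimate.
# Assumption: a full-time week is 40 hours (implied by 240 hours, not stated).
DEVELOPERS = 3
WEEKS = 2
HOURS_PER_WEEK = 40        # assumed
AI_ASSISTED_HOURS = 20     # total coding time reported above

manual_hours = DEVELOPERS * WEEKS * HOURS_PER_WEEK
speedup = manual_hours / AI_ASSISTED_HOURS

print(f"manual: {manual_hours} h, speedup: {speedup:.0f}x")
```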

Seeing these numbers, many might exclaim that programmers will become obsolete. Let me assure you, programmers won’t disappear - they’ll become more important, more specialized, and more powerful. For detailed reasoning, see the video demonstration and analysis at the end of this article.

In this series, I’ll share the entire development process, covering product design, architecture, tool selection, and prompt engineering details. I’ll also open-source some code for learning and reference. Notably, I conducted comparative tests between DeepSeek V3/R1 and Claude 3.5 Sonnet, and I’ll share insights on DeepSeek’s enterprise-level coding capabilities and practical techniques.

Article Series

This series will be divided into the following parts, continuously updated to provide deep insights into AI-assisted development and building complete projects from scratch:

- Introduction - Covers Code2Docs.ai’s product design and background, showcasing current results and introducing architecture and development environment. I’ll highlight key efficiency boost points in AI-assisted development.

- Project Creation & Main Flow Development - Details using AI to create code projects from scratch and implement main workflows. Covers Python API development, GitHub Action creation, and frontend API integration.

- GitHub Action Development - Core functionality implementation, involving complex Linux scripting like JSON file generation and parsing. Shares effective issue writing and task planning adjustments.

- Multilingual Support & Layout Adjustments - Simplifies multilingual interface implementation and content translation. AI completes in minutes what might take humans 3 days.

- Generation Process Monitoring - Complex task of creating status pages for real-time job progress tracking. Involves backend API implementation and frontend data rendering.

- Homepage & Documentation Library Optimization - Code structure refactoring and component extraction. AI plays a key role in optimization tasks.

- Miscellaneous - Automating documentation tasks like About pages, privacy policies, and terms of service using AI templates.

Development continues with Docker deployment and CI/CD pipeline setup, where AI continues to excel.

Background

In December 2024, DeepSeek launched V3, which showed programming capabilities approaching those of Claude 3.5 Sonnet. On January 20, 2025, DeepSeek released R1, further improving programming accuracy and stability. With AISE Workspace’s Code2Docs capabilities ready, I wondered: could I develop a complete app using DeepSeek + ClinePRO during the Spring Festival break?

Product Showcase

Talk is cheap; show me the code and the app.

Code2Docs.ai is live. Scan the QR code or visit: https://code2docs.ai

Screenshot of DeepSeek-generated “About” page:

Code2Docs is an innovative documentation generator that transforms your code repositories into comprehensive, well-structured documentation. Our AI-driven system analyzes your codebase to create clear, accurate, and maintainable documentation that evolves with your project.

Example documentation library generated by Code2Docs.ai. The tool scans codebases, uses AST for analysis, and leverages DeepSeek V3 for code interpretation. Current documentation is technical, but we plan to enhance it with API docs, code examples, and business context. Future support includes Word and PowerPoint formats.

Product Design

On my flight from Beijing to Hong Kong, I designed Code2Docs.ai’s workflow:

- Codebase to Documentation: Already implemented in AISE Workspace via CLI.

- Automation System: Simplest implementation via GitHub Action triggered by API.

Three main modules:

- Frontend Website: Built from scratch for desktop and mobile.

- Backend API: Added to AISE CLI for Code2Docs capability.

- Automation System: Asynchronous multi-process job execution via GitHub Action.
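
As a rough sketch of the "GitHub Action triggered by API" idea, the snippet below builds (but does not send) a request to GitHub's `workflow_dispatch` REST endpoint. The workflow file name `code2docs.yml`, the `repo_url` input, and the `main` branch are hypothetical; the article does not show how the real backend calls GitHub.

```python
import json
import urllib.request

GITHUB_API = "https://api.github.com"


def build_dispatch_request(owner: str, repo: str, workflow_file: str,
                           token: str, repo_url: str,
                           ref: str = "main") -> urllib.request.Request:
    """Build a workflow_dispatch request for a GitHub Actions workflow."""
    url = (f"{GITHUB_API}/repos/{owner}/{repo}"
           f"/actions/workflows/{workflow_file}/dispatches")
    # `inputs` must match what the workflow declares; `repo_url` is hypothetical.
    body = {"ref": ref, "inputs": {"repo_url": repo_url}}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


request = build_dispatch_request(
    owner="code2docs-ai", repo="code2docs-ai-core",
    workflow_file="code2docs.yml",          # hypothetical file name
    token="<personal-access-token>",
    repo_url="https://github.com/example-org/example-repo",
)
# urllib.request.urlopen(request) would actually send it.
```

Because the Action runs asynchronously, the API call returns right away and the backend only needs to track the job afterwards.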

Simplified scenario diagram:

Detailed workflow:

- User Input: Git repository URL on homepage.

- GitHub Action Trigger: Pre-configured GitHub Action execution.

- Documentation Generation & Logging:

- Extract organization and repository names.

- Maintain workflow_runs.json.

- Call AISE Workspace Code2Docs capability.

- Push generated documentation to new Git repository.

- Result Statistics: Display generation statistics from workflow_runs.json.
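
The logging steps can be illustrated in Python. This is a sketch, not the project's implementation: the real Action appears to do this with shell scripting, and the fields stored in `workflow_runs.json` here (`org`, `repo`, `status`, `timestamp`) are a hypothetical schema.

```python
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path
from urllib.parse import urlparse


def parse_repo_url(repo_url: str) -> tuple[str, str]:
    """Return (organization, repository) from an HTTPS Git URL."""
    parts = [p for p in urlparse(repo_url).path.split("/") if p]
    if len(parts) < 2:
        raise ValueError(f"not a repository URL: {repo_url}")
    org, repo = parts[0], parts[1]
    return org, repo.removesuffix(".git")


def record_run(log_path: Path, repo_url: str, status: str) -> list[dict]:
    """Append one run entry to the JSON log and return all entries."""
    runs = json.loads(log_path.read_text()) if log_path.exists() else []
    org, repo = parse_repo_url(repo_url)
    runs.append({
        "org": org,
        "repo": repo,
        "status": status,
        "timestamp": datetime.now(timezone.utc).isoformat(),
    })
    log_path.write_text(json.dumps(runs, indent=2))
    return runs


with tempfile.TemporaryDirectory() as tmp:
    log = Path(tmp) / "workflow_runs.json"
    record_run(log, "https://github.com/example-org/example-repo.git", "queued")
    runs = record_run(log, "https://github.com/example-org/other-repo", "completed")
    completed = sum(1 for r in runs if r["status"] == "completed")
    print(f"{len(runs)} runs logged, {completed} completed")
```

Keeping the log as a single JSON file means the statistics page can be rendered from one read, at the cost of needing care if several jobs write to it concurrently.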

Public GitHub Action repository: https://github.com/code2docs-ai/code2docs-ai-core

Efficiency Boosters

Optimizations revealed AI’s strength in handling repetitive tasks. Key efficiency improvements:

Multilingual Processing: From Tedious to Efficient

Multilingual implementation, while mature, is time-consuming. AI automated detection and implementation of LanguageContext.tsx, significantly reducing workload.

Mobile Optimization: Simplified Development

Responsive design, traditionally complex, was fully automated by AI. All styles and layouts were AI-generated, with surprisingly aesthetic results. Completed 10 frontend pages in 5 days (3 hours/day).

Domain Knowledge Handling

AI automated generation of legal documents (privacy policy, terms of service) by reading templates and adapting to project specifics, saving significant time and reducing legal risks.

Project Summary

Development timeline:

- Start: January 31 (3rd day of Spring Festival)

- MVP Completion: February 6 (9th day)

- Total Hours: <20 (5-8 AM daily)

Components:

- Frontend: Vite + React + Tailwind

- Backend: Python

- Automation: GitHub Action

Traditional development estimate: 3 developers × 2 weeks = 240 hours

AI-assisted efficiency: 12x boost

AI not only saved time but also made development more enjoyable by automating tedious tasks, allowing focus on core logic and design.

AI Toolset

Key tools used:

Models

- DeepSeek V3 & R1: Primary models, mostly V3 with R1 for complex tasks.

- Claude 3.5 Sonnet: Reference model.

- Qwen 2.5 Coder 32b Instruct: Reference model.

IDE

- Visual Studio Code

AI Coding Tools

- AISE ClinePRO: Enterprise-grade multi-agent coding tool based on cline.

- AISE SmartCode: Code completion and smart dialogue tool.

- AISE SmartChat: Technical research and design documentation tool.

- GitHub Workspace: Online AI multi-agent coding tool for GitHub Action development.

All AI coding tools use DeepSeek V3 as the underlying model.

Video demonstration of ClinePRO’s multi-agent coding capabilities:

AI Era: Real Developers Won’t Be Obsolete

The development process highlights the new paradigm of human-AI collaboration. Key developer capabilities in the AI era:

- Deep Understanding of AI Logic: Monitoring and correcting AI output.

- Stronger Technical Foundation: Understanding underlying principles for code validation and optimization.

- Broader Knowledge: Cross-domain expertise for better AI utilization.

- More Responsible Work Ethic: Final responsibility remains with human developers.

AI won’t replace developers but will become a powerful assistant, freeing us from repetitive tasks and enabling focus on creative and strategic work. Real developers will play a more crucial role in the AI era.

Embrace this new paradigm, enhance your technical depth and breadth, and become an AI-era developer who can effectively harness AI’s potential. This is the true direction of future technological development.

Hands-on Video Breakdown - How Manus is Revolutionizing the 2025 AI Agent Market

Manus gives large language models hands and eyes, enabling them to not just think and speak, but also act.

Last week, an AI product called Manus once again set the Chinese tech community abuzz. Its concept of a universal agent, impressive demo scenarios, and invitation-only mechanism, combined with its Chinese team and DeepSeek background, quickly propelled it to the top of trending searches. My first reaction upon seeing the Manus demo was that it’s essentially a multi-agent hybrid scheduling system that integrates various innovative technologies from the AI Agent field over the past year, as the official statement suggests:

“We firmly believe in and practice the philosophy of ‘less structure, more intelligence’: When your data is sufficiently high-quality, your models are powerful enough, your architecture is flexible enough, and your engineering is solid enough, then concepts like computer use, deep research, and coding agents naturally emerge as capabilities.”

That said, Manus has indeed excelled in human-computer interaction and user experience. It imposes no restrictions on how users apply it and can handle a wide range of general tasks, such as creating Xiaohongshu ads, developing investment strategies, analyzing stock returns, conducting financial valuations, or even coding small games. It’s precisely the kind of product users have been expecting from AI, one that meets the everyday needs of ordinary people, hence its instant fame.

Video Demonstration - Automated Multi-language Website Creation

The following video showcases Manus automatically creating a multi-language website based on user-uploaded materials. The entire process is seamless, with Manus independently handling material reading and analysis, website construction and testing, and final deployment, fully demonstrating its high level of automation and autonomous execution capabilities as an AI Agent system.

What is an AI Agent?

For the average person, this is probably the first question when encountering products like Manus. Simply put, an AI Agent acts as the eyes, nose, and ears of large language models, and more crucially, their hands and feet. The biggest technical bottleneck in large language models has been their ability to think and express but not execute actual operations. With the advent of DeepSeek, large models can now perform extremely complex thinking and planning, providing complete and detailed solutions to our problems, even outlining specific steps to complete tasks. However, no matter how advanced these models become, they’re ultimately limited to text-based interactions. They can’t open files to read documents, nor can they write and save documents; they can’t read coffee machine manuals or press buttons on the machine. While large models are incredibly intelligent, without equipping them with eyes, ears, hands, and feet, they remain like caged beasts - loud but ultimately ineffective.

Deconstructing Manus’s Execution Steps: Understanding AI Agent Mechanics

From the video example above, Manus has implemented at least the following agents to assist the large model in completing tasks:

1. File Reading Agent

Responsible for extracting useful information from user-provided files, as shown in this Manus log where it’s using the agent to read file content.

2. File Creation Agent

Handles the creation of various files needed to store information and provide output, as seen in this log where Manus is using the agent to create files.

3. Command Execution Agent

Executes commands on the computer to drive various tasks. Here, Manus is using the ‘cp’ command to manipulate the file system and organize folder structures.

Notably, the ability to execute system commands means AI Agents can fully leverage the operating system’s capabilities to perform complex operations.

4. Browser Agent

Used to operate the browser, open URLs, and read web content. In this example, Manus is using this tool to read its own website content and perform testing/validation.

In summary, Manus integrates various tools to provide large models with senses (for reading information) and limbs (for executing actions). This aligns with what the Manus founder mentioned in the video: the concept of “mind and hands.”

“Mind and hands” provides an intuitive explanation of Manus’s essence: equipping large models with hands and eyes, enabling them to not just think and speak, but also act.
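
To make the "hands" idea concrete, here is a minimal sketch of a tool registry that executes tool calls on a model's behalf. It is not Manus's implementation; the tool names and the `{"name": ..., "args": [...]}` call format are hypothetical, and the browser tool is left out to keep the example self-contained.

```python
import subprocess
import sys
import tempfile
from pathlib import Path
from typing import Callable


def read_file(path: str) -> str:
    """File reading tool: return the text content of a file."""
    return Path(path).read_text(encoding="utf-8")


def write_file(path: str, content: str) -> str:
    """File creation tool: write text and report what was written."""
    Path(path).write_text(content, encoding="utf-8")
    return f"wrote {len(content)} characters to {path}"


def run_command(*argv: str) -> str:
    """Command execution tool: run a program and return its stdout."""
    result = subprocess.run(argv, capture_output=True, text=True, check=True)
    return result.stdout.strip()


# Registry mapping tool names (as a model would emit them) to functions.
TOOLS: dict[str, Callable[..., str]] = {
    "read_file": read_file,
    "write_file": write_file,
    "run_command": run_command,
}


def dispatch(tool_call: dict) -> str:
    """Execute one tool call of the form {"name": ..., "args": [...]}."""
    tool = TOOLS.get(tool_call["name"])
    if tool is None:
        return f"error: unknown tool {tool_call['name']}"
    return tool(*tool_call["args"])


with tempfile.TemporaryDirectory() as tmp:
    target = str(Path(tmp) / "notes.txt")
    # In a real agent these calls would come from the model's output.
    plan = [
        {"name": "write_file", "args": [target, "hello agent"]},
        {"name": "read_file", "args": [target]},
        {"name": "run_command", "args": [sys.executable, "-c", "print(2 + 3)"]},
    ]
    observations = [dispatch(call) for call in plan]
```

Each observation would be fed back to the model as text, which is how a text-only model ends up "seeing" the result of its actions.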

The PDCA Cycle in AI Agents

In Manus, we’ve discovered a crucial capability in AI Agent systems: the ability to self-construct task execution loops, essentially implementing the PDCA cycle (Plan-Do-Check-Act) from project management.

The PDCA cycle is vital for building self-improving systems. For AI Agent systems, only with this capability can they truly possess the ability to “correctly” execute tasks.

The challenge of determining task execution “correctness” has long been a major hurdle for AI systems. Traditionally, the approach has been to enhance model capabilities, hoping to improve task execution accuracy. However, as we often say, “nobody’s perfect.” The correct approach should be:

- Allow models to make mistakes during task execution

- Build a system that can “check” task execution

- Identify issues during task execution promptly

- Address and resolve them, achieving continuous optimization and improvement
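
The loop described above can be sketched as a small function. The `plan`, `do`, and `check` callables are placeholders for a model call, a tool run, and a verifier; the toy versions below are hypothetical stand-ins that fail once and then succeed.

```python
from typing import Callable


def pdca_loop(plan: Callable[[str, list[str]], str],
              do: Callable[[str], str],
              check: Callable[[str], list[str]],
              task: str, max_rounds: int = 3) -> tuple[str, int]:
    """Run Plan-Do-Check-Act until the check passes or rounds run out."""
    issues: list[str] = []
    for round_no in range(1, max_rounds + 1):
        steps = plan(task, issues)      # Plan: (re)plan using known issues
        result = do(steps)              # Do: execute the plan
        issues = check(result)          # Check: verify the outcome
        if not issues:
            return result, round_no
        # Act: the issues found feed into the next round's plan
    raise RuntimeError(f"unresolved after {max_rounds} rounds: {issues}")


# Stand-ins for a model and a test harness (hypothetical behavior).
def toy_plan(task: str, issues: list[str]) -> str:
    return task + (" with title" if "missing title" in issues else "")


def toy_do(steps: str) -> str:
    return f"<html>{'<title>Demo</title>' if 'with title' in steps else ''}</html>"


def toy_check(result: str) -> list[str]:
    return [] if "<title>" in result else ["missing title"]


page, rounds = pdca_loop(toy_plan, toy_do, toy_check, "build page")
print(rounds, page)
```

The first round is allowed to fail; what matters is that the check catches the missing title and the second plan corrects it. The `max_rounds` cap keeps a model that never converges from looping forever.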

Advice for General Users

For the average user, the first half of this article should suffice to help you fully understand Manus’s nature and working model. The next step is to unleash your imagination and learn how to interact with AI Agent systems like Manus, mastering the art of collaborating with AI.

While the Manus demos we see online appear smooth and flawless, when you actually operate such systems yourself, you’re bound to encounter various issues.

The fundamental reason is that these systems still face numerous challenges and require further technical exploration. As humans, we must:

- Stay informed about these systems’ capabilities and limitations

- Understand their working mechanisms

- Be among the first to benefit from the productivity boost AI Agents offer

Important Note:

We can’t wait until these systems are fully mature before using them, because by then, we’ll have already been surpassed by those who dared to explore, mastered human-AI interaction skills, and adapted to the characteristics of the digital symbiosis era.

You won’t be replaced by AI, but you might be replaced by others who have mastered AI technology.

Manus’s System Architecture

Another hot topic last week was the OpenManus open-source project, which claimed to replicate Manus in just three hours after dinner. The competition in the tech world is indeed fierce, with tech enthusiasts constantly oscillating between mutual appreciation and rivalry.

For developers, code has never been a barrier to replicating systems. Once we see your system, we can replicate it in no time. Of course, when it comes to product experience, stability, and reliability, that’s another story.

For systems like Manus, any developer in the AI application tech space can immediately recognize the technologies behind it, and these are essentially accumulated or mature technologies from the past two years. For example:

- Model Planning Capability: Derived from models like OpenAI o1, DeepSeek V3/R1, Claude 3.5 Sonnet

- Agent Scheduling and Integration: Using function calling or the Model Context Protocol (MCP)

- Various Agent Tools: Common components from traditional software systems

Therefore, if a company wants to replicate such a system, the deciding factor is essentially just determination, as technology is no longer the main obstacle.

Here’s a highly simplified Manus system architecture. For each component in this architecture, I can find mature solutions. What remains is refining details, enriching application scenarios, and enhancing user experience. In this process, what’s being tested isn’t technological innovation, but rather the team’s understanding and accumulation of their specific scenarios.

The 2025 AI Agent Market Explosion

If November 2022’s ChatGPT launch ignited a global technological revolution around large language models, then 2023 to 2024 saw the gradual development and evolution of peripheral system architectures around these models. During this period, the capabilities of large models themselves also improved, making systems like Manus possible. Here, we must mention DeepSeek, a significant milestone in China’s exploration of large model capabilities. DeepSeek’s greatest significance lies in bringing large model technology into the mainstream consciousness. Technically speaking, DeepSeek models have truly endowed large models with relatively accurate complex task planning capabilities, providing the fundamental ability to effectively schedule AI Agents.

Manus is essentially a product launched at the right time, allowing ordinary people to experience the powerful capabilities of combining large models with Agents, fully meeting public expectations for AI systems. This time, online evaluations of Manus show a clear polarization: some believe it’s incredibly powerful, while others see it as just a “shell” product. Regardless, following DeepSeek, Manus has given ordinary people a tangible experience of the productivity boost from combining large models with Agents. From this perspective, Manus is likely to trigger an explosive growth in the 2025 AI Agent market, as it has already created sufficient market demand for developers. Now it’s up to developers to meet these demands.

Let’s look forward to the 2025 AI Agent market explosion. Perhaps by the end of 2025, we won’t need to search for information online ourselves, write research reports manually, or create PowerPoint presentations from scratch. And that often-dreaded annual performance review? It’s estimated that over 80% of it will be generated by AI Agents.

Quickly Build Multi-Model Team Agents Based on Dify Platform

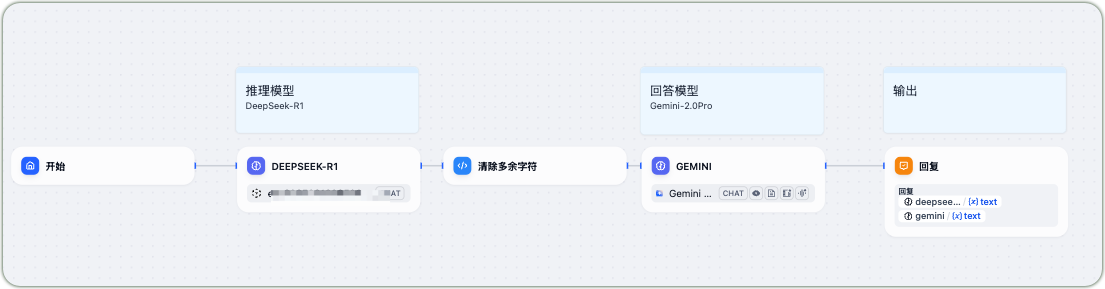

As natural language processing technology evolves towards multimodality, the collaborative optimization of Large Language Models (LLMs) has become the core path to breaking through the limitations of single models. For example, while DeepSeek-R1 possesses powerful deep thinking capabilities, it occasionally experiences “hallucinations,” whereas the Gemini model excels in reducing the frequency of “hallucinations.” Therefore, how to integrate the advantages of different models to create more reliable and intelligent chat agents has become a key direction for technical exploration.

This article details how to create multi-model enhanced chat agents on the Dify platform, as well as the technical path for integrating them with the OpenAI interface.

The Necessity and Conception of Multi-Model Collaboration

In practical applications, model selection plays a decisive role in the performance of chat agents. Take DeepSeek-R1 and Gemini as examples: DeepSeek-R1 demonstrates high capability in complex reasoning tasks, but the “hallucination” problem seriously affects the reliability of its answers, while the Gemini model is known for its low “hallucination” frequency and stable output. Combining DeepSeek-R1’s reasoning ability with Gemini’s answer generation capability therefore promises a high-performance chat agent. The core of this idea is a process design that lets each model play to its strengths at a different stage, improving overall chat quality.

Underlying Principle of Multi-Model Collaboration: Heterogeneous Model Complementary Mechanism

According to AI model experts’ research, current mainstream LLMs show significant capability differentiation. Reasoning-oriented models, represented by DeepSeek-R1, demonstrate deep thinking ability in complex task decomposition (average logic chain length of 5.2 steps), but DeepSeek-R1’s hallucination rate reaches 12.7%. Gemini 2.0 Pro, through reinforcement alignment training, keeps its hallucination rate below 4.3% but has limited reasoning depth. The Dify platform’s model routing function supports a dynamic allocation mechanism that pipelines a reasoning stage (DeepSeek-R1) into a generation stage (Gemini); according to tests, this improves comprehensive accuracy by 28.6%.

Reference: Multi-Model Collaboration Performance Metrics Comparison

| Metric | Single Model | Mixed Model | Relative Change |
|---|---|---|---|
| Answer Accuracy | 76.2% | 89.5% | +17.5% |
| Response Latency (ms) | 1240 | 1580 | +27.4% |
| Hallucination Rate | 9.8% | 3.2% | -67.3% |
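
The last column of the table is a relative change, not a difference in percentage points: accuracy rises by 13.3 points, which is 17.5% of the single-model baseline. The short check below recomputes the column from the two measured columns, using only the figures in the table.

```python
def relative_change(before: float, after: float) -> float:
    """Percent change from `before` to `after`, relative to `before`."""
    return (after - before) / before * 100


rows = {
    "Answer Accuracy (%)": (76.2, 89.5),
    "Response Latency (ms)": (1240, 1580),
    "Hallucination Rate (%)": (9.8, 3.2),
}

for metric, (single, mixed) in rows.items():
    print(f"{metric}: {relative_change(single, mixed):+.1f}%")
```

Note that the latency figure is a cost rather than a gain: chaining two models makes each response roughly a quarter slower.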

Creating Multi-Model Chat Agents Based on Dify

Preliminary Preparation

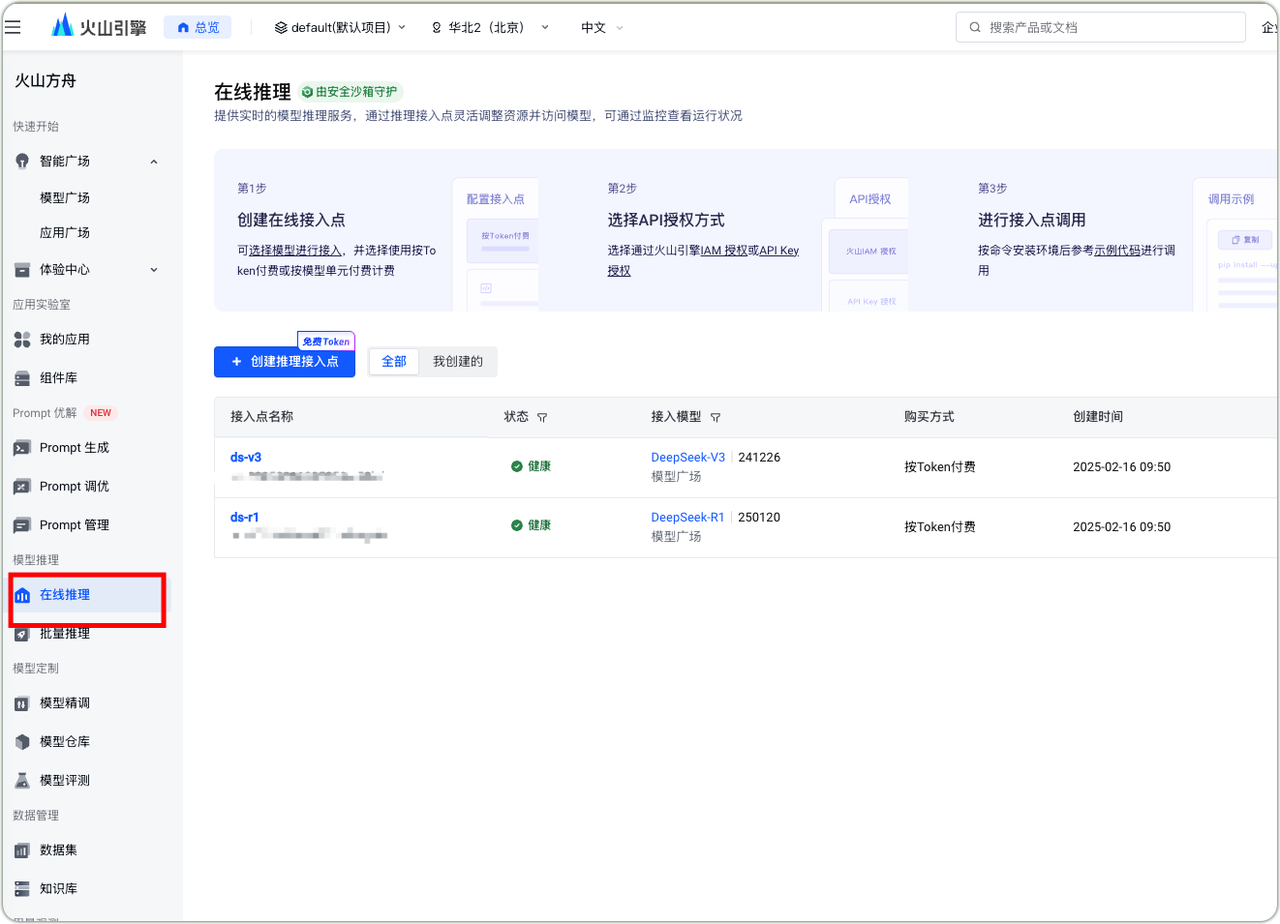

Volcengine Model Access Point

- DeepSeek-R1 Integration with Volcengine

- Enter Volcengine Ark online inference module

- Create inference access point

- Generate API Key

- Determine payment mode

- Complete environment configuration

- Conduct call testing

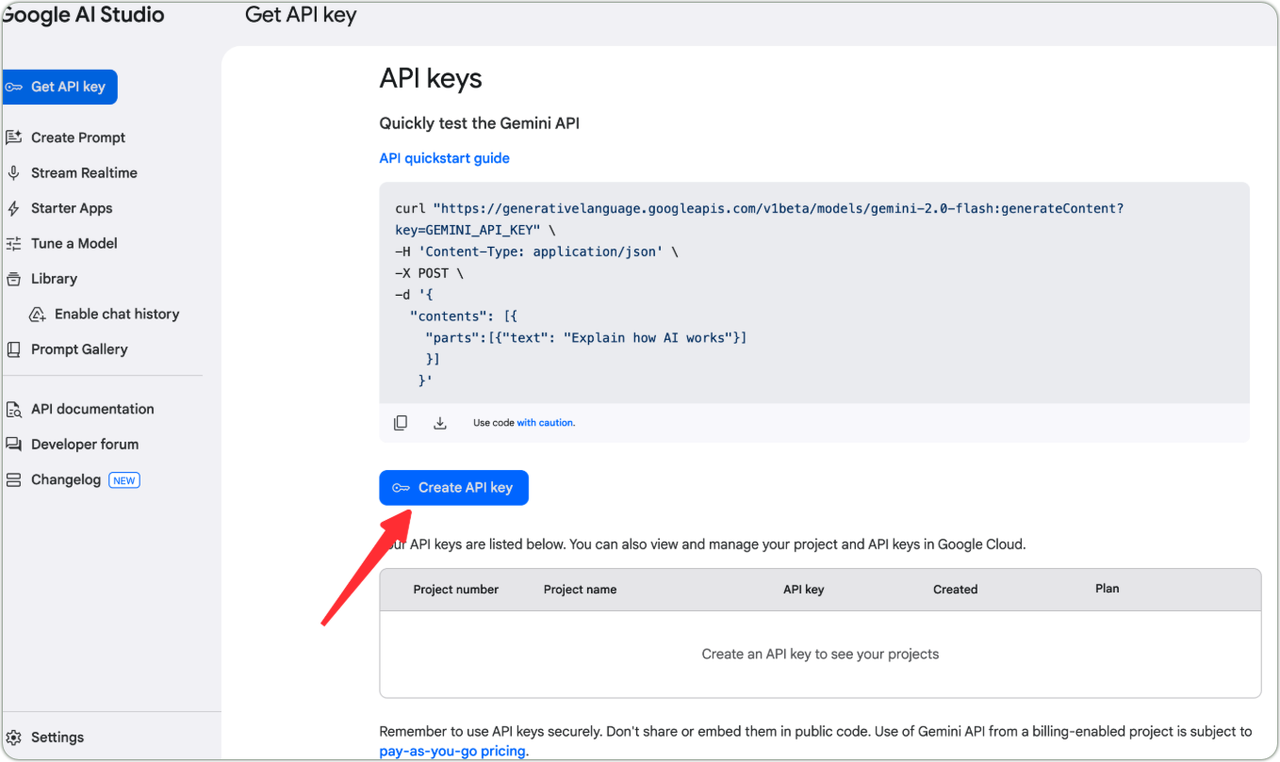

Gemini API Key

- Visit Gemini management page

- Follow guidelines to create dedicated API Key

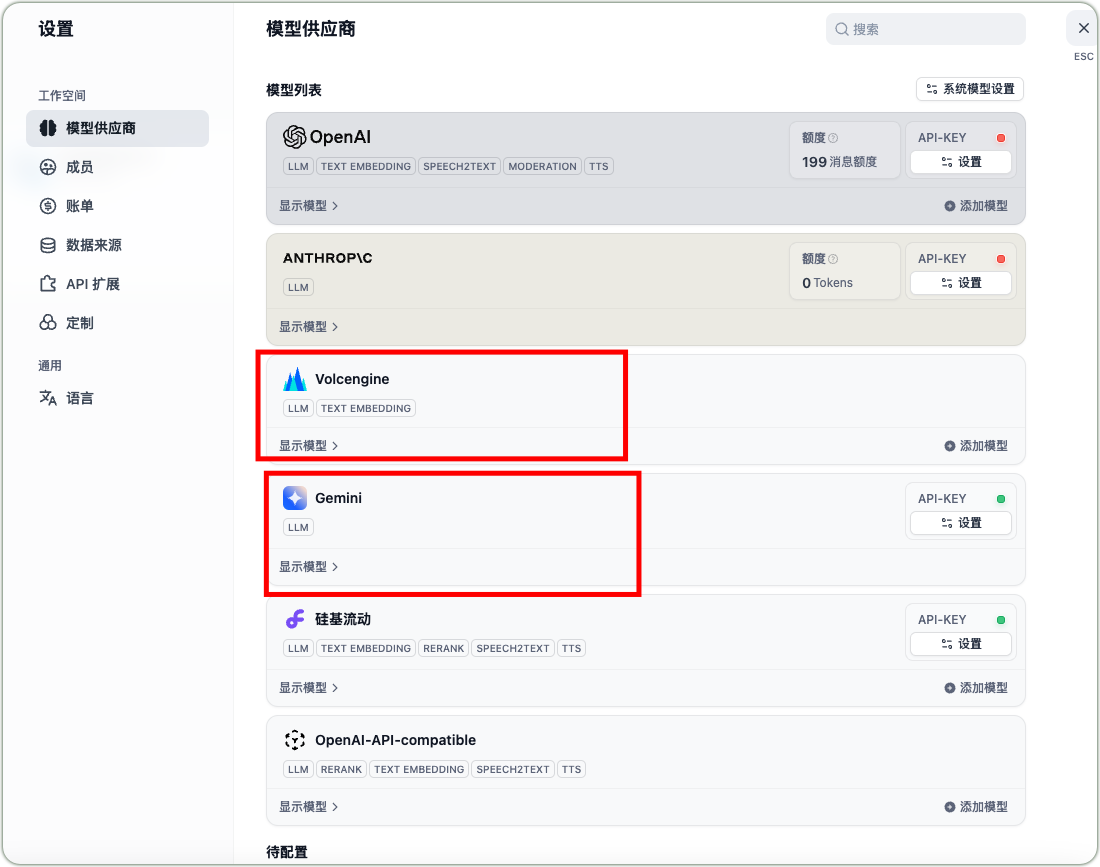

Dify Platform Model Integration

- Log in to Dify platform

- Enter model settings interface

- Add DeepSeek-R1 and Gemini models

DeepSeek-R1 Configuration Parameter Example

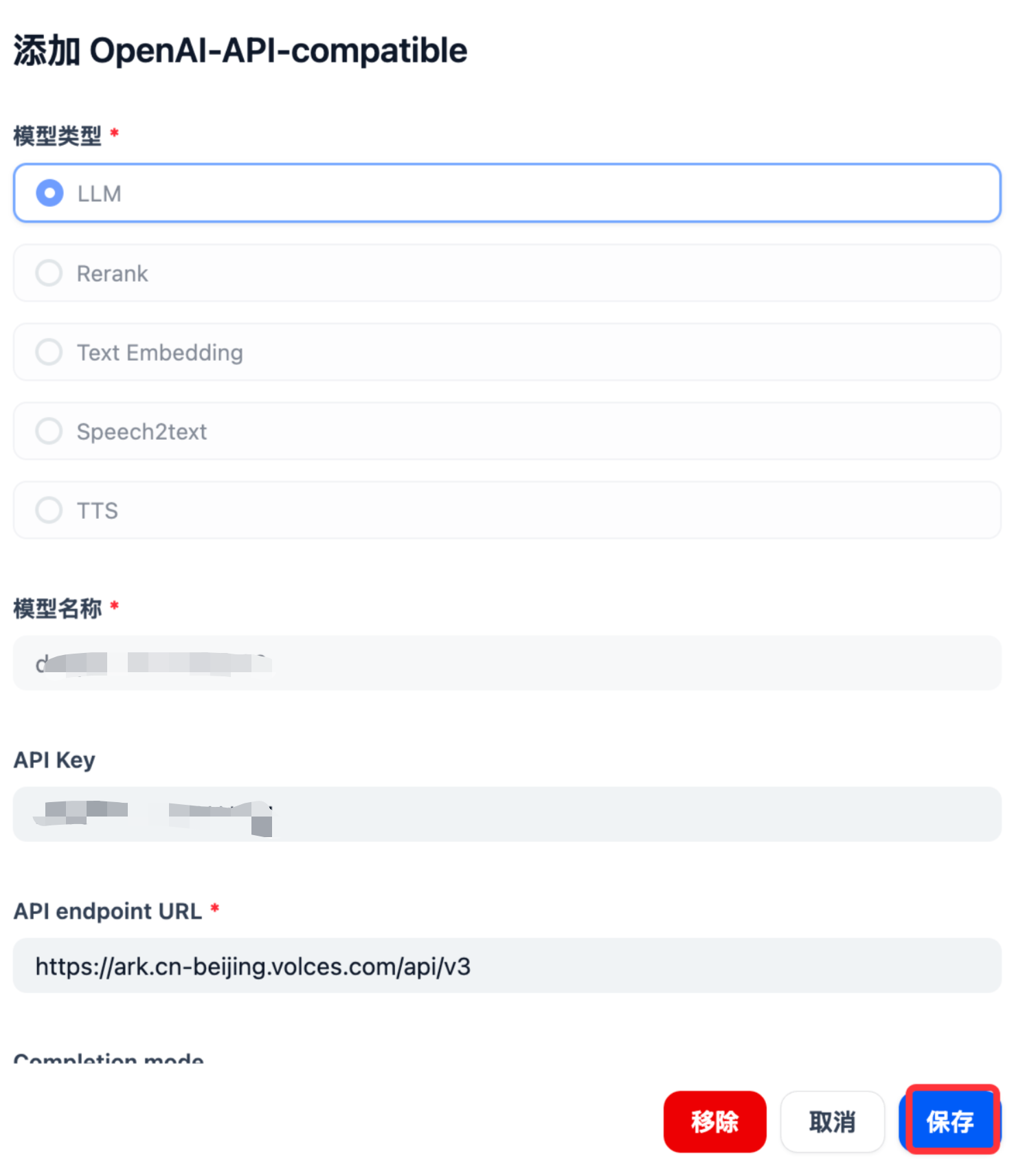

Taking private integration with Volcengine’s DeepSeek-R1 as an example, configure your custom access model endpoint and API_KEY information in the OpenAI-API-compatible model configuration of the model provider, ensuring all parameter settings are correct.

Volcengine Model Access Point Configuration Parameters

Model Type: LLM

Model Name: Your model access point name

API Key: Fill in your own Volcengine api key

API endpoint URL: https://ark.cn-beijing.volces.com/api/v3

Completion mode: Chat

Model Context Length: 64000

Maximum Token Limit: 16000

Function calling: Not supported

Stream function calling: Not supported

Vision support: Not supported

Stream mode result separator: \n
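
Before wiring the access point into Dify, it can help to see what an OpenAI-style request against it looks like. The sketch below only builds the request. It assumes the endpoint accepts the usual `/chat/completions` path of OpenAI-compatible APIs; the API key and model name are placeholders.

```python
import json
import urllib.request

ENDPOINT = "https://ark.cn-beijing.volces.com/api/v3"   # from the parameters above
MAX_TOKENS = 16000                                       # Maximum Token Limit


def build_chat_request(api_key: str, model: str, prompt: str,
                       max_tokens: int = 1024) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request (not sent here)."""
    if max_tokens > MAX_TOKENS:
        raise ValueError(f"max_tokens exceeds the configured limit {MAX_TOKENS}")
    payload = {
        "model": model,        # your access point name
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "stream": False,
    }
    return urllib.request.Request(
        f"{ENDPOINT}/chat/completions",   # assumed OpenAI-compatible path
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={"Authorization": f"Bearer {api_key}",
                 "Content-Type": "application/json"},
    )


req = build_chat_request("<your-api-key>", "<your-access-point-name>", "ping")
# Sending it is one call: urllib.request.urlopen(req)
```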

Quick Creation of Chat Applications on Dify Platform

The Dify platform provides a convenient workflow-style calling method that enables application orchestration without complex coding. During the creation process:

- Set DeepSeek-R1 as the reasoning model

- Set Gemini 2.0 Pro as the response model

- Add preprocessing steps such as clearing redundant characters
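
Outside Dify, the same three-step flow can be sketched in plain Python. The models are passed in as functions so the example runs offline with stubs. Treating `<think>` tags and extra whitespace as the "redundant characters" is an assumption, and the prompt wording is hypothetical.

```python
import re
from typing import Callable

Model = Callable[[str], str]   # prompt in, text out


def clean(text: str) -> str:
    """Preprocessing: drop <think> tags and collapse redundant whitespace."""
    text = re.sub(r"</?think>", "", text)
    return re.sub(r"\s+", " ", text).strip()


def answer(question: str, reasoner: Model, responder: Model) -> str:
    """Stage 1 reasons about the question; stage 2 writes the final reply."""
    reasoning = clean(reasoner(question))
    prompt = (f"Question: {question}\n"
              f"Reasoning notes: {reasoning}\n"
              "Write the final answer based on the notes.")
    return responder(prompt)


# Stub models so the sketch runs offline; swap in real API clients.
def fake_reasoner(question: str) -> str:
    return "<think>\n  Docker is required;\n\n then clone the repo. </think>"


def fake_responder(prompt: str) -> str:
    notes = prompt.split("Reasoning notes: ")[1].split("\n")[0]
    return f"Steps: {notes}"


print(answer("How do I deploy the tool?", fake_reasoner, fake_responder))
```

The response model never sees the raw reasoning output, only the cleaned notes, which keeps stray markup from leaking into the final answer.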

Dify Application Workflow

For rapid application setup, you can import the pre-debugged compose.yaml configuration file into the Dify platform and modify the DeepSeek-R1 model access point name according to actual needs, enabling quick deployment of the chat application.

Dify DSL File Import

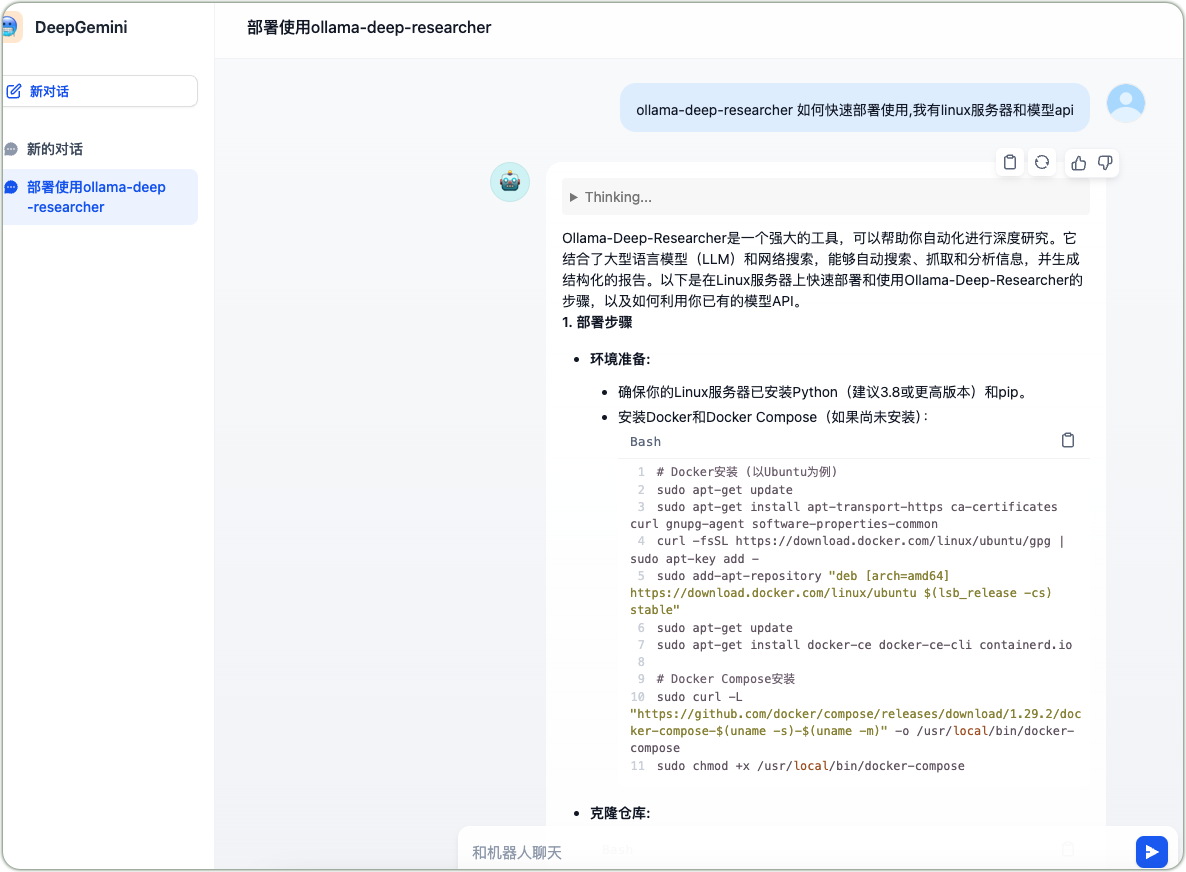

Application Trial and Effect Evaluation

After completing the application creation, conduct functional testing. Taking “how to quickly deploy and use ollama-deep-researcher” as an example, the chat application first calls DeepSeek-R1 for reasoning analysis, followed by Gemini generating the final answer, providing detailed deployment steps from environment preparation (installing Python, pip, Docker, and Docker Compose) to repository cloning, demonstrating the advantages of multi-model collaboration.

DeepSeek-R1 and Gemini Collaboration

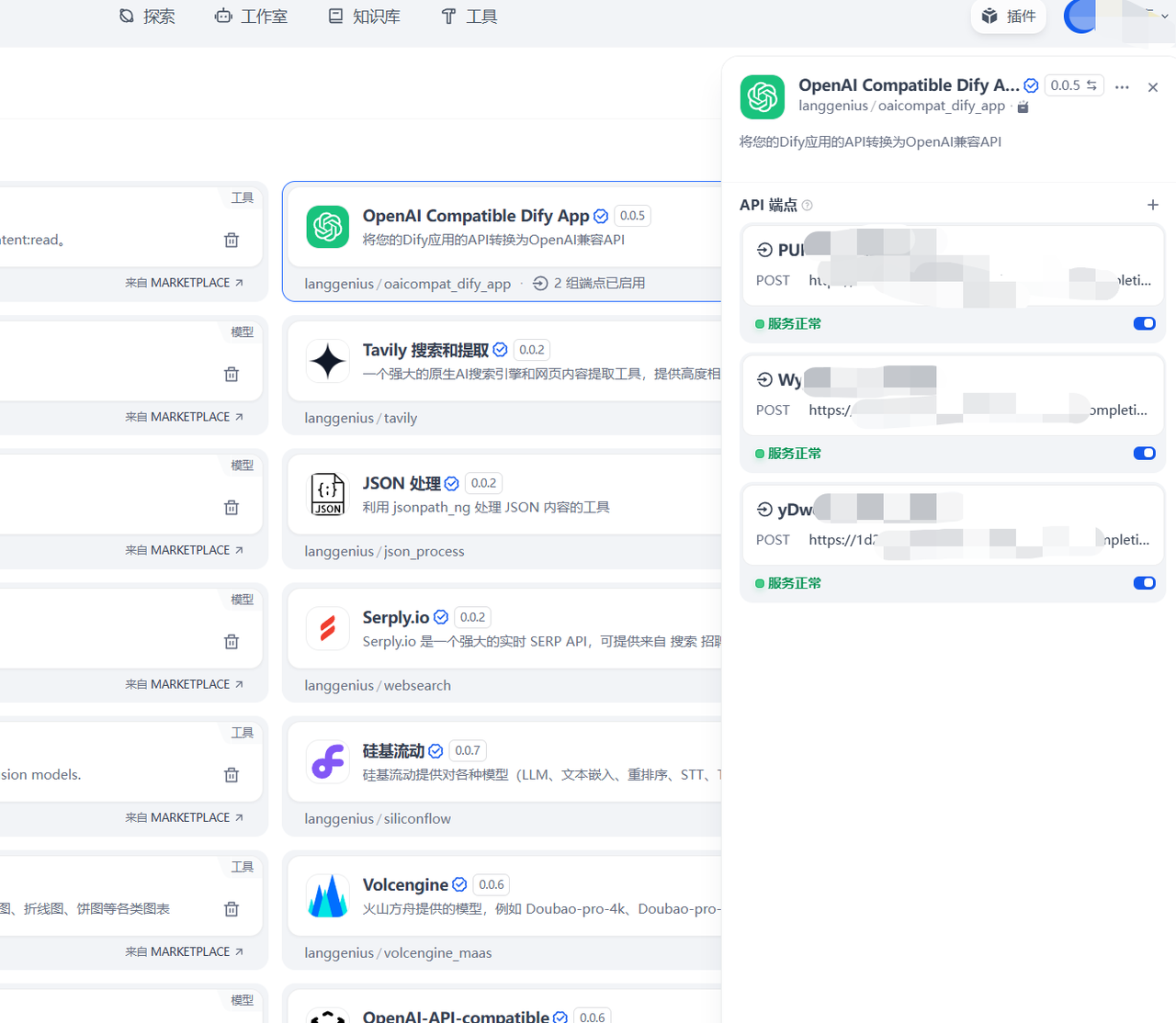

Implementing Dify Agent Integration with OpenAI

Private Deployment Model Integration with Dify

Privately deployed models that are compatible with the OpenAI interface can be called by installing the OpenAI-API-compatible component in Dify. Model invocation requires precise configuration of model parameters, such as model type, completion mode, model context length, maximum token limit, and function calling, so that they match the target model’s characteristics and usage requirements. After completing the configuration and saving the settings, models or agent applications that conform to the OpenAI interface specification can be integrated.

Reverse Integration of Dify Agent Applications with OpenAI

In the Dify platform’s plugin options, search for and install the OpenAI Compatible Dify App, and locate the “Set API Endpoint” function entry.

Dify Model Integration with OpenAI

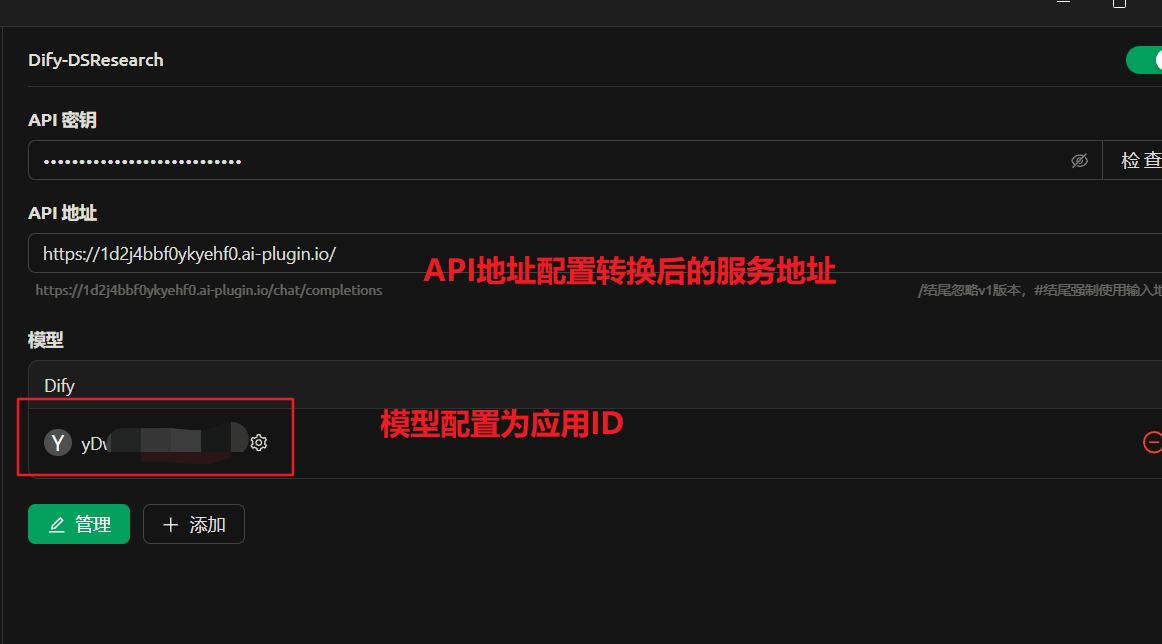

Select the Dify application to be converted to OpenAI compatible API, input the application-generated API Key, and configure the corresponding application ID in the OpenAI Compatible Dify App to make it work properly.

Converting Dify Application to OpenAI Compatible API

Dify Application Compatible with OpenAI Services

After configuration, the Dify application’s API will have OpenAI compatibility.

Taking Cherry Studio as an example, by filling in the converted API address and key during integration, you can call the Dify agent application through the OpenAI interface.

Cherry Studio Application Integration with OpenAI Compatible API

The following image shows an example of the integrated agent application after interface conversion, demonstrating normal chat dialogue functionality.

Cherry Studio Application Model Chat Integration

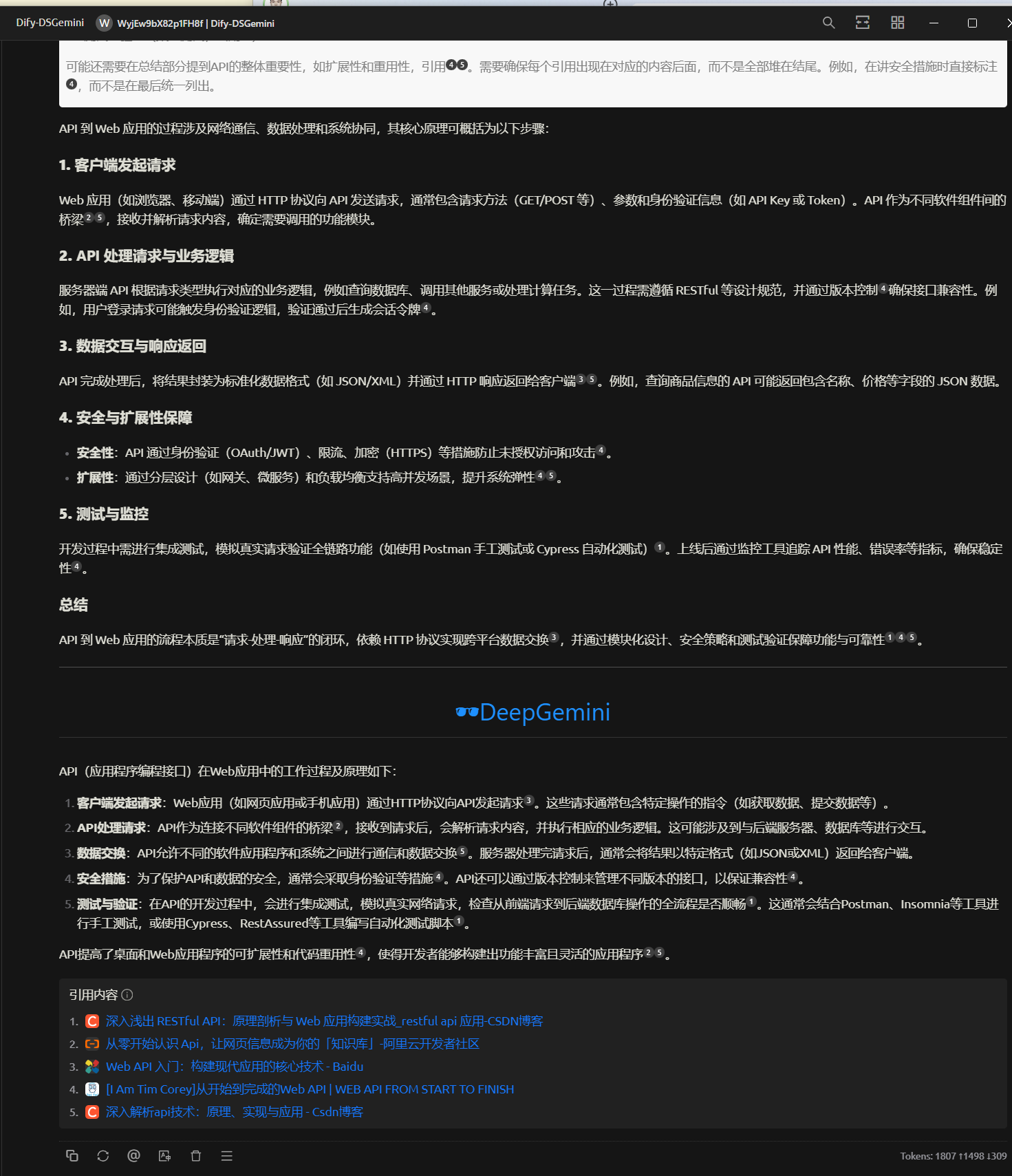

Related Knowledge Point: The Importance of APIs in Agent Applications

In the process of implementing Dify agent integration with OpenAI, APIs (Application Programming Interfaces) play a crucial role. The workflow of APIs in Web applications is based on a “request-process-response” closed-loop mechanism.

- Client Request Initiation: Web applications (such as browser-based or mobile applications) send requests to APIs via HTTP protocol, including request methods (such as GET, POST), parameters, and authentication information (such as API Key or Token). After receiving the request, the API parses it and determines the required functional module to call.

- API Request Processing and Business Logic Execution: The server-side API executes corresponding business logic according to request type and RESTful design specifications, potentially involving database queries, calling other services, or performing complex computational tasks. During processing, version control ensures interface compatibility, such as user login requests triggering authentication logic and generating session tokens after verification.

- Data Interaction and Response Return: After completing business logic processing, the API encapsulates results in standardized data formats (such as JSON or XML) and returns them to the client via HTTP response.

- Security and Scalability Assurance: To ensure API security, measures such as authentication (OAuth, JWT), rate limiting, encryption (HTTPS) are adopted to prevent unauthorized access and malicious attacks. For scalability, layered design (such as gateway, microservice architecture) and load balancing technology support high concurrency scenarios, enhancing system elasticity and scalability.
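
The "request-process-response" loop can be shown without any web framework. The handler below is a toy sketch: the route `/v1/users/<id>`, the key store, and the user data are all hypothetical, and a real service would sit behind HTTPS and a proper authentication scheme.

```python
import json

API_KEYS = {"demo-key"}                      # hypothetical key store
USERS = {"42": {"id": "42", "name": "Ada"}}  # hypothetical data


def handle(method: str, path: str, headers: dict[str, str]) -> tuple[int, str]:
    """Process one request and return (status code, JSON body)."""
    # Authentication: reject requests without a known API key.
    token = headers.get("Authorization", "").removeprefix("Bearer ")
    if token not in API_KEYS:
        return 401, json.dumps({"error": "unauthorized"})
    # Routing: only GET /v1/users/<id> exists in this sketch.
    parts = path.strip("/").split("/")
    if method != "GET" or parts[:2] != ["v1", "users"] or len(parts) != 3:
        return 404, json.dumps({"error": "not found"})
    # Business logic: look the user up (stands in for a database query).
    user = USERS.get(parts[2])
    if user is None:
        return 404, json.dumps({"error": "no such user"})
    # Response: encapsulate the result as JSON.
    return 200, json.dumps(user)


print(handle("GET", "/v1/users/42", {"Authorization": "Bearer demo-key"}))
print(handle("GET", "/v1/users/42", {}))
```

The version prefix in the path (`/v1`) is one common way to keep older clients working when the interface changes.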

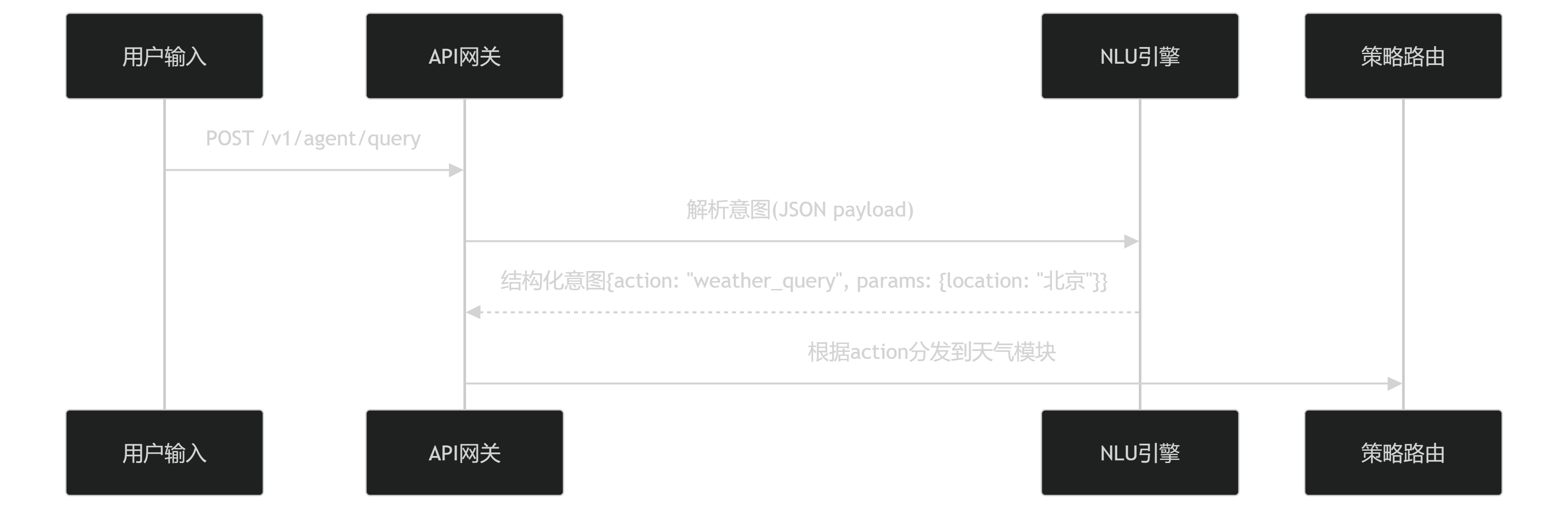

API Design

In building ChatGPT-like agent systems, the API architecture acts as a central nervous system, coordinating the collaborative operation of perception, decision-making, and execution modules.

- Protocol Specification: Adopts OpenAPI 3.0 standard to define interfaces, ensuring unified access for multimodal inputs (text/voice/image)

- Context Awareness: Implements cross-API call context tracking through X-Session-ID headers, maintaining dialogue state

- Dynamic Load Balancing: Automatically switches service endpoints based on real-time monitoring metrics (such as OpenAI API latency)

- Heterogeneous Computing Adaptation: Automatically converts request formats to adapt to different AI engines (OpenAI/Anthropic/local models)

- Stream Response: Supports progressive result return through Transfer-Encoding: chunked

The existence of APIs not only enables communication and data exchange between different software components but also greatly improves the scalability and code reusability of desktop and Web applications, making it a core technology for building modern efficient applications.
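
Of the design points above, dynamic load balancing is the easiest to show in a few lines. The sketch below picks whichever endpoint has the lowest recent average latency. The class, the endpoint names, and the five-sample window are hypothetical choices, not part of Dify or any gateway product.

```python
from collections import defaultdict, deque
from statistics import mean


class LatencyRouter:
    """Pick the endpoint with the lowest recent average latency."""

    def __init__(self, endpoints: list[str], window: int = 5) -> None:
        self.endpoints = endpoints
        # Keep only the last `window` samples per endpoint.
        self.samples: dict[str, deque] = defaultdict(
            lambda: deque(maxlen=window))

    def record(self, endpoint: str, latency_ms: float) -> None:
        self.samples[endpoint].append(latency_ms)

    def choose(self) -> str:
        # Endpoints without samples score 0.0 so they get tried first.
        def score(endpoint: str) -> float:
            history = self.samples[endpoint]
            return mean(history) if history else 0.0
        return min(self.endpoints, key=score)


router = LatencyRouter(["primary", "fallback"])   # hypothetical names
for ms in (1200, 1500, 1800):
    router.record("primary", ms)
router.record("fallback", 900)
print(router.choose())
```

A sliding window makes the router forget old measurements, so an endpoint that recovers is picked up again after a few fast responses.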

Conclusion

Through the above operations, we have achieved the integration of multiple models on the Dify platform and their connection with the OpenAI interface. This technical solution provides a feasible path for building efficient, intelligent chat applications, and we hope it can provide valuable reference for relevant technical personnel and developers. Building on this foundation, further exploration of chat application interface interconnection and functionality enhancement with other agents has broad prospects. For example, implementing collaborative work between different agents and dynamically allocating model resources according to task requirements promises to provide users with more personalized and intelligent service experiences. Continuous research and innovation in this field will promote the application and development of natural language processing technology in more scenarios.

Future Prospects:

- Implement collaborative work between different agents

- Dynamically allocate model resources according to task requirements

- Provide more personalized and intelligent service experiences

GitHub Copilot VSCode 1.98: Raising the Bar in the AI Coding Assistant Race

The AI coding assistant landscape is heating up, and GitHub Copilot’s latest update for Visual Studio Code is a clear response to the growing competition from tools like Cursor AI. With the release of version 1.98, Copilot isn’t just keeping pace - it’s pushing the boundaries of what AI-assisted development can achieve.

This update comes at a pivotal moment in the AI coding assistant space. As highlighted in recent comparisons between GitHub Copilot and Cursor AI, the competition is driving rapid innovation. While Cursor AI has gained attention for its seamless IDE integration and context-aware suggestions, Copilot is countering with a suite of powerful new features that redefine developer productivity.

The AI Assistant Arms Race: Copilot’s Response

The battle between AI coding assistants has been a boon for developers everywhere. Cursor AI’s emergence as a strong competitor has clearly pushed GitHub Copilot to innovate faster. Version 1.98 introduces several features that directly address areas where Cursor AI has been making waves:

1. Agent Mode: The Autonomous Coding Assistant (Preview)

Taking a page from Cursor AI’s playbook, Copilot now offers enhanced autonomous capabilities. Agent Mode represents a significant leap forward, allowing Copilot to:

- Search your workspace for relevant context

- Edit files with precision

- Run terminal commands (with explicit permission)

- Complete tasks end-to-end

The improvements in Agent Mode UX are particularly noteworthy:

- Inline terminal command display for better transparency

- Editable terminal commands before execution

- ⌘Enter shortcut for quick command confirmation

2. Next Edit Suggestions: Smarter Code Refactoring (Preview)

The new collapsed mode for Next Edit Suggestions (NES) offers a more streamlined experience:

- Suggestions appear only in the left editor margin

- Code suggestions reveal upon navigation

- Consecutive suggestions show immediately after acceptance

3. Notebook Support: Data Science Meets AI (Preview)

Copilot’s new notebook support brings AI assistance to data science workflows:

- Create and modify notebooks from scratch

- Edit content across multiple cells

- Insert, delete, and change cell types seamlessly

Custom Instructions: Tailoring Copilot to Your Workflow (GA)

The general availability of custom instructions marks a significant milestone:

- Use .github/copilot-instructions.md for team-specific guidance

- Enable via github.copilot.chat.codeGeneration.useInstructionFiles setting

- Maintain consistent coding standards across projects
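
Setting this up for a repository amounts to creating one Markdown file and flipping one setting. The helper below is a sketch of that, run against a temporary directory; it assumes the setting goes into the workspace's `.vscode/settings.json`, and the guidance text is a made-up example.

```python
import json
import tempfile
from pathlib import Path

SETTING = "github.copilot.chat.codeGeneration.useInstructionFiles"


def enable_custom_instructions(project: Path, guidance: str) -> None:
    """Create the instructions file and switch the setting on for a workspace."""
    instructions = project / ".github" / "copilot-instructions.md"
    instructions.parent.mkdir(parents=True, exist_ok=True)
    instructions.write_text(guidance, encoding="utf-8")

    settings_path = project / ".vscode" / "settings.json"
    settings_path.parent.mkdir(parents=True, exist_ok=True)
    # Assumes an existing settings.json is plain JSON without comments.
    settings = (json.loads(settings_path.read_text(encoding="utf-8"))
                if settings_path.exists() else {})
    settings[SETTING] = True
    settings_path.write_text(json.dumps(settings, indent=2), encoding="utf-8")


with tempfile.TemporaryDirectory() as tmp:
    project = Path(tmp)
    enable_custom_instructions(
        project, "- Use TypeScript strict mode.\n- Prefer named exports.\n")
    saved = json.loads((project / ".vscode" / "settings.json").read_text())
    instructions_exist = (project / ".github" / "copilot-instructions.md").exists()
    print(saved)
```

Committing both files to the repository is what makes the guidance team-wide rather than per-developer.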

Model Selection: Powering Up with GPT 4.5 and Claude 3.7 Sonnet

Copilot’s expanded model selection offers unprecedented flexibility:

- GPT 4.5 (Preview): OpenAI’s latest powerhouse

- Claude 3.7 Sonnet (Preview): Enhanced agentic capabilities

- Model-specific features for specialized tasks

Vision Support: Seeing is Coding (Preview)

The new vision capabilities open up exciting possibilities:

- Attach images for debugging assistance

- Implement UI mockups with AI-generated code

- Multiple attachment methods (drag & drop, clipboard, screenshot)

Image attachments in chat

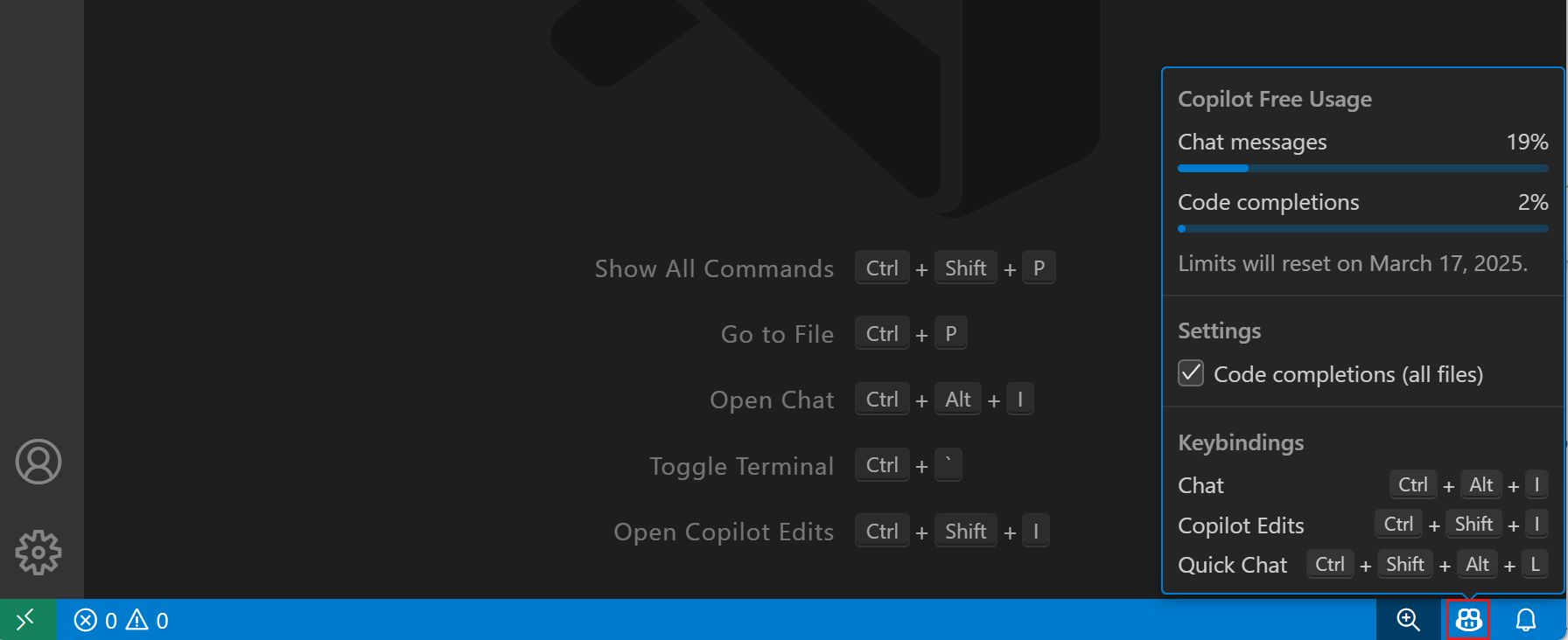

Copilot Status Overview: At-a-Glance Insights (Experimental)

The new status overview provides:

- Quota information for free users

- Key editor settings visibility

- Quick access to Copilot features

Copilot status indicator in the status bar

The Future of AI-Assisted Development

This update demonstrates GitHub Copilot’s commitment to staying at the forefront of AI-assisted development. While the competition with Cursor AI continues to drive innovation, Copilot’s latest features show that it’s not just keeping up - it’s setting new standards for what developers can expect from their AI coding assistants.

As the AI coding assistant landscape evolves, one thing is clear: developers are the ultimate winners in this race. Whether you’re team Copilot, team Cursor, or using both, these tools are transforming how we write code, solve problems, and think about software development.